You never know what’s going to happen when you click on a LinkedIn job posting button. I’m always on the lookout for interesting and impactful projects, and one in particular caught my attention: “Far North Enterprises, a global fabrication and distribution establishment, is looking to modernize a very old data environment.” I clicked the button, and almost immediately received notification that a new email from [email protected] had arrived. “That was fast,” I thought. Inside the email was an invitation to find out more about the project, and a Zoom link for a meeting scheduled within the hour. It also included apologies for the short notice and for not meeting face-to-face. The project was already running behind and the in-person logistics would be difficult. (I later came to believe that the latter wouldn’t necessarily have been an insurmountable obstacle.)

In no time I was on a call with a group of Scandanicelandic-looking technical architects. They told me that my combination of large project and large data management experience was just what they were looking for. I was flattered that they had read my blog, as well as the recently published 6 Secrets for Delivering Impossible Projects.

They then introduced the project: modernizing Santa’s Naughty-Nice List.

For background they referred me to several documentary films and historical texts, most of which were familiar. We arranged to meet again the next morning. I already had most of the sources–what I didn’t have they provided–and did my homework overnight.

When we reconvened, they began by explaining that their operations had been relying on the existing system for more than half a century. Someone mentioned punch cards and they chuckled over their shared recollection of an apparently unfortunate cotton candy incident. I had no doubt that COBOL featured prominently on all of their resumes. Nevertheless, they were well versed in modern architectures as well as data and analytics methodologies.

They described three high-level business processes:

- Event Collection and Evaluation

- Classification Aggregation and Prediction

- Location Tracking and Verification

I was looking forward to digging into the details; in particular the data sources, volume, and utilization.

Event Collection and Evaluation

Their surveillance operation is the envy even of the NSA. “He sees you when you’re sleeping” and “knows when you’re awake.” That’s pretty comprehensive, and maybe a little bit creepy.

I was reminded, though, that as a child, going to sleep can be scary: nightmares, monsters in the closet and under the bed, and who knows what all creeping around your bedroom without you knowing. When faced with such an array of perils, it can be comforting to know that you’re not alone and that someone who cares for you is watching over you while you’re sleeping.

Event information is collected from myriad sources: cameras, microphones, elves on shelves, satellite imagery, website tips, parent and teacher hotlines, department store Santas, and, for some of the more difficult cases, agents clandestinely dispatched to observe.

Continuous data streams are divided into discrete candidate Naughty-Nice events through a process called chunking. Done by hand, it is very labor-intensive and requires special training to ensure reliability and consistency in determining event boundaries. Technology has facilitated this process tremendously, and manual chunking is now done only for quality control. I was impressed by their embrace of AI throughout the operation. Not everything that happens in an individual’s life is captured. This initial screening is a judgment call about whether the event potentially qualifies as Naughty or Nice. No final determination yet, but worth taking a look at.

I started doing the math in my head about the disk space required to capture and the network bandwidth required to transmit these events. Assuming 50 megabytes per minute of video and an average of ten minutes of video per day per person, that’s about 180 GB per year per person. That’s a huge amount of data to consolidate and store for everyone, but it’s not so bad as long as the processing and storage are at the edge. Like an IoT system. I learned that the detailed data is kept primarily for appeals and litigation and is only rarely accessed. Taking a few extra seconds to pull back the data when it’s needed is fine.
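As a rough check on that arithmetic, here is a minimal Python sketch. The constant names are illustrative, and the only inputs are the two assumptions stated above (50 MB per minute, ten minutes per day).

```python
# Back-of-the-envelope video volume per person, using the figures assumed in the text.
MB_PER_MINUTE = 50      # assumed video bitrate
MINUTES_PER_DAY = 10    # assumed average footage captured per person per day
DAYS_PER_YEAR = 365

mb_per_year = MB_PER_MINUTE * MINUTES_PER_DAY * DAYS_PER_YEAR
gb_per_year = mb_per_year / 1000  # decimal gigabytes

print(f"{gb_per_year:.1f} GB per person per year")
```

That comes to 182.5 GB, which rounds to the "about 180 GB" quoted above.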

A quick word about Data Security. The information that Far North Enterprises collects can be very sensitive, and they are not immune from cyberattacks. Extreme care is taken to secure the data both in motion and at rest. They call their security team the “Men in Black” because you don’t want to know what they’re seeing. And obviously, the perpetrators are written into the Naughty List.

The event is then described using a standard glossary of terms. This all used to be done by hand and, like chunking, was labor-intensive and required special training. Today, AI transcription and description services are sufficiently reliable. Mistakes do still occasionally occur, and the more consequential episodes receive special attention and review.

Each event description is passed along to a decision process that determines whether the event gets categorized as Naughty, Nice, or Neutral. After all, “He knows if you’ve been bad or good.” This decision process is very proprietary.

I did learn that massive neural networks are being trained to differentiate Naughty and Nice, but only on an experimental basis. They did seem encouraged by the early results. Broader adoption will depend upon resolving three common neural network challenges: building confidence in the decision process, accepting that neural networks are black boxes producing decisions that are not generally explainable, and determining whether to push the massive models to the edge or move massive amounts of event data to the model.

Since Santa’s operation is Christmas-oriented, I expected the criteria for event categorization to align with Christian ethics, though I couldn’t get confirmation. I did verify that babies are always on the Nice List, and that they do not use sampling (which is good because it would be just like me to be having a rough go of it on my sampled day).

I was very curious about sins of omission vs. sins of commission. For example, let’s say a classmate is feeling sad. Choosing to sit down beside her to console her would be a good candidate for Nice. But what if you saw her and chose not to do anything? Would that be considered Naughty? They wouldn’t answer hypotheticals, but it was strongly suggested that it is best to take opportunities to be Nice as they present themselves.

Questions have arisen recently about the morality of Santa’s List itself. To many parents, misbehaving children aren’t ever Naughty. Pulling the legs off that spider isn’t Naughty, it’s a child exploring the world around him. Running wildly around the tables at the restaurant isn’t Naughty, it’s a healthy expression of childhood exuberance. They believe that expecting children to be constrained by ethical norms is stifling, and tying gifts from Santa to one’s adherence to these norms is emotional blackmail. Nevertheless, his is a private, non-governmental, volunteer enterprise. His rules. It was mentioned, though, that the staff allocated to customer service and claims has increased significantly in the past generation.

Clearly the Event Collection and Evaluation process is highly distributed and occurs at the edge. There’s no reason to consolidate either the data or the processing. When an event is characterized as Naughty or Nice, it is wrapped up (with a bow) and delivered to a regional repository.

Classification Aggregation and Prediction

The ultimate deliverable is, of course, Santa’s List, with data from the regional repositories aggregated into a single binary result for each individual.

Consolidating the data from the multiple edge nodes seemed to me to pose a significant Master Data Management challenge: specifically, matching events at different times and places with the correct individuals. An entire industry has emerged around recognizing when Bill Jones and William Jones are the same person. Not a problem, I was told, because events are associated with inherent characteristics of the person. Fingerprints, earlobe shape, or retina print maybe? Maybe. Trade secret.

When the conversation turned to aggregation and categorization, my first guess was that a ledger is maintained throughout the year with a balance of weighted Naughty and Nice events. A single large Naughty event scoring, say, -10 could be more than offset by four slightly Nice events at +3 each. Or maybe they use a decision tree or another neural network.
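The ledger guess can be sketched in a few lines of Python. To be clear, this is only my speculation made concrete: the function names, the zero threshold, and the rule that a tie counts as Naughty are all assumptions, not anything Far North Enterprises confirmed.

```python
from collections import defaultdict

def tally(events):
    """Sum the weighted scores of (individual_id, score) events per individual."""
    balances = defaultdict(int)
    for individual_id, score in events:
        balances[individual_id] += score
    return dict(balances)

def categorize(balance, threshold=0):
    """Hypothetical rule: strictly above the threshold is Nice, otherwise Naughty."""
    return "Nice" if balance > threshold else "Naughty"

# One large Naughty event (-10) offset by four slightly Nice events (+3 each).
events = [("kid-1", -10), ("kid-1", 3), ("kid-1", 3), ("kid-1", 3), ("kid-1", 3)]
balances = tally(events)
result = categorize(balances["kid-1"])
print(balances["kid-1"], result)
```

The balance ends at +2, so under this made-up rule the individual finishes on the Nice side.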

Social media provides another wrinkle. Paradoxically, as much as social media feeds a torrent of information about others, we often feel more disconnected and the overwhelming majority of these “interactions” are Neutral. But are you being Nice when you reflexively click the Like button below a friend’s photograph? Are you being Naughty when you make a snarky reply to a post that turns out to have been composed by a bot? More unanswered hypotheticals. Just be nice.

A categorization based on the information at that specific Regional Repository is stored locally along with key components of that calculation.

A continuous current categorization status for each individual is not needed. Santa just checks The List twice: once around Thanksgiving, and a second time on Christmas Eve (mainly to review any last-minute changes). Data in the Regional Repositories is accessed through federated queries, with as much of the processing pushed down as possible.
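The idea of pushing processing down to the Regional Repositories can be illustrated with a small Python sketch. Everything here is hypothetical: the repositories are plain lists of (individual, score) pairs, and the weighted score is the ledger guess from earlier rather than the real, proprietary calculation.

```python
def regional_partial(repository):
    """Runs 'at' a Regional Repository: reduce raw events to one partial sum per individual."""
    partial = {}
    for individual_id, score in repository:
        partial[individual_id] = partial.get(individual_id, 0) + score
    return partial

def federated_balances(repositories):
    """Runs centrally: merge the small partial results instead of moving raw events."""
    totals = {}
    for repository in repositories:
        for individual_id, subtotal in regional_partial(repository).items():
            totals[individual_id] = totals.get(individual_id, 0) + subtotal
    return totals

north = [("a", 3), ("b", -10)]
south = [("a", 3), ("b", 3), ("b", 3)]
totals = federated_balances([north, south])
print(totals)
```

Only one row per individual per repository crosses the network, which is the point of pushing the aggregation down.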

But just like the operational data generated by most businesses, event data is used here for additional purposes. The most critical of these is to support predictive analytics for scheduling toy fabrication. The Fabrication Team can’t wait until the preliminary Naughty-Nice list is generated in November to know for whom they’re making presents. Instead, once a month the event data is gathered from the regional repositories and a confidence score is calculated to identify those children most likely to be categorized as Nice. The higher the likelihood, the earlier in the year fabrication is started. Many Nice determinations are made at the very last minute. Many of us remember the urgency of improving our behavior once we start seeing Christmas decorations around town. It gets extra-busy in December, but that’s normal. It is also possible to flip from Nice to Naughty, necessitating the removal of a previously prepared gift. Fortunately, this is extremely rare, but it does happen.

These predictive analyses require as input much more than just the single binary result for each individual. They also require historical data from previous years. The more history the better. It’s a familiar conversation. One side wants all of the data forever, and the other is trying to manage cost, maintenance effort, and risk. It’s also a familiar compromise. Detailed data is stored for a couple of years, then summarized and “skinnied” farther back in history.

Since the final result is just a binary choice, it might be tempting for the operational systems not to worry about Data Quality. This is clearly not true. Data Quality is a top priority. Nobody ever says, “The data is good enough for Santa’s List, it’s good enough for you.” Nobody wants their bad data to be the cause of an error or miscategorization in any process anywhere. That would be Naughty.

“But what if someone firmly on the Nice List discovers something that they want for Christmas later in the year, long after fabrication had been completed for them?” I asked. They looked at me with an expression that, had they been from further south, would have said, “bless his heart.” If they could have patted my head through the Zoom call screen they would have. Suffice it to say that specific gift assignments can be adjusted throughout the year. Nobody gets shortchanged for being predictably Nice.

Location Tracking and Verification

Researchers have analyzed Santa’s route, speed, payload weight, reindeer horsepower, and caloric intake. We all take for granted that he delivers to children around the world, but nobody seems to wonder about how he knows where to go. How he knows where each individual on The List will be on Christmas Morning. The answer is a data collection effort that rivals that of the Naughty-Nice events.

Most families have consistent Christmas traditions and the history of each individual’s Christmas Morning location is maintained. In addition, Route Planning Location Services uses three primary data sources. The first is a baseline set of location details from postal address services like Pitney Bowes and Experian. These are certainly useful for identifying the parents’ residences, but not for those families that will be travelling. They’re not moving. They’re just not going to be at home. An address verification service won’t know about that. The second source is a set of real-time feeds from transportation providers including airlines, ships, trains, and hotels for any bookings that include Christmas Eve. Finally, the Naughty-Nice event collection is leveraged to identify and interpret Christmas Morning planning conversations. This detail made it clear to me that Santa keeps an eye not only on the children but on their parents as well. Again, this information can be very sensitive and care is taken to only capture what is absolutely needed and to ensure its security.

When a location event is identified at the Regional Repository, it is forwarded to the Central Repository in the Far North Data Center.

The following operational process is not well known and I had to get special permission to include it here: an Advance Team travels Santa’s route ahead of him to verify locations. Santa doesn’t have time for empty stops or for making a second circuit to correct errors. When a recipient is not where they are expected to be, an Operations Team back at headquarters finds them and reworks the route. Last-minute logistical adjustments and unexpected changes are expected.

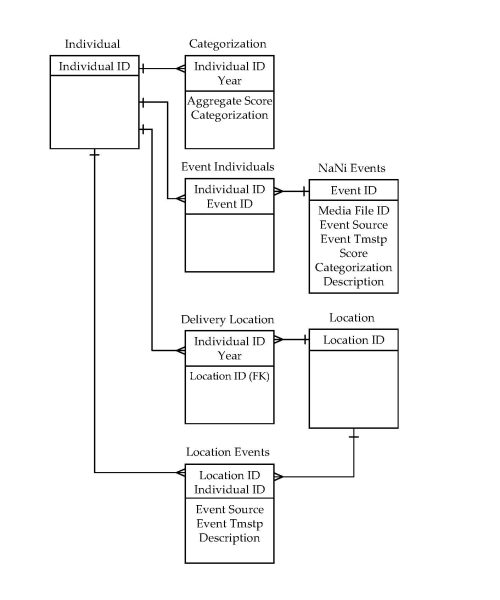

Data Model

Once I understood the business processes and solution components, I could start laying out a conceptual data model, including entities, key fields, relationships, and a few attributes.

The model is focused on the Individual. We talked for a long time about other names for that central entity. It seems that the longest data modeling conversations are about naming. We considered Party but that was too general, Customer was too antiseptic, and Believer and Child excluded certain people that would still be in the database.

Each Individual has a Categorization for each year, which includes their aggregate score and final Naughty-Nice determination. It is informally referred to as Santa’s List, even though the actual report generated Christmas Eve joins Individual, Categorization, and Delivery Location. Now, before you comment, I understand that conceptual data models typically don’t include summary tables, but this one is so central to the business purpose, in fact it is the business purpose, that it warranted inclusion.

A Naughty-Nice (NaNi) Event may involve multiple Individuals, and each Individual is associated with multiple Events. An associative table resolves the many-to-many relationship.

A current working Delivery Location is stored for each Individual through the year, with the actual location captured at the time of delivery. Changes are logged and analyzed for process improvement. Historical data, along with Location Events collected from transportation providers and Regional Repositories, are stored to determine the Delivery Location.
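For readers who think better in tables, here is a minimal sketch of the core entities using Python's built-in sqlite3 module. The table names, column names, and sample rows are all invented for illustration; the real model has more attributes and was never shown in this form.

```python
import sqlite3

SCHEMA = """
CREATE TABLE individual (
    individual_id TEXT PRIMARY KEY,          -- the GUID in the real system
    name          TEXT NOT NULL
);
CREATE TABLE categorization (                -- one row per Individual per year
    individual_id   TEXT NOT NULL REFERENCES individual(individual_id),
    list_year       INTEGER NOT NULL,
    aggregate_score INTEGER NOT NULL,
    determination   TEXT NOT NULL CHECK (determination IN ('Naughty', 'Nice')),
    PRIMARY KEY (individual_id, list_year)
);
CREATE TABLE nani_event (
    event_id    INTEGER PRIMARY KEY,
    description TEXT NOT NULL,
    category    TEXT NOT NULL CHECK (category IN ('Naughty', 'Nice')),
    occurred_at TEXT NOT NULL
);
CREATE TABLE individual_event (              -- associative table for the many-to-many
    individual_id TEXT NOT NULL REFERENCES individual(individual_id),
    event_id      INTEGER NOT NULL REFERENCES nani_event(event_id),
    PRIMARY KEY (individual_id, event_id)
);
CREATE TABLE delivery_location (
    individual_id TEXT NOT NULL REFERENCES individual(individual_id),
    list_year     INTEGER NOT NULL,
    address       TEXT NOT NULL,
    PRIMARY KEY (individual_id, list_year)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO individual VALUES (?, ?)", [("g1", "Ann"), ("g2", "Ben")])
conn.execute(
    "INSERT INTO nani_event VALUES (1, 'Shared lunch with a classmate', 'Nice', '2023-03-01')"
)
# One event involving two Individuals.
conn.executemany("INSERT INTO individual_event VALUES (?, ?)", [("g1", 1), ("g2", 1)])
conn.executemany(
    "INSERT INTO categorization VALUES (?, ?, ?, ?)",
    [("g1", 2023, 12, "Nice"), ("g2", 2023, 4, "Nice")],
)
conn.executemany(
    "INSERT INTO delivery_location VALUES (?, ?, ?)",
    [("g1", 2023, "1 Elm St"), ("g2", 2023, "2 Oak Ave")],
)

# "Santa's List" as a join of Individual, Categorization, and Delivery Location.
santas_list = conn.execute(
    """
    SELECT i.name, c.determination, d.address
    FROM individual i
    JOIN categorization c ON c.individual_id = i.individual_id
    JOIN delivery_location d
      ON d.individual_id = i.individual_id AND d.list_year = c.list_year
    WHERE c.list_year = 2023
    ORDER BY i.name
    """
).fetchall()
print(santas_list)
```

The final query mirrors the Christmas Eve report described above: The List is a join rather than a stored table in its own right.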

Additional entities live on the periphery and inform gift selection and distribution routing. These include regional and national customs, religious affiliation, relationships with parents and guardians and their contact information, airspace restrictions, regional topology, etc. Most of these are individual lookup tables or small clusters of related tables. Those structures were worked out long ago, are still working fine, and I was told that I didn’t need to worry about them.

They showed me the metadata repository for their current system, and I was very impressed by its completeness. Descriptions, expected content, and security requirements at minimum are defined for everything. I have no doubt that the new environment will be similarly well documented.

Database Sizing

The key metrics for database sizing are the number of individuals on The List and their locations, the expected average number of events collected for each individual, and the required depth of history.

Individual:

The focus of Santa’s list is almost exclusively on the children, but we know that some adults are on it, too. Consider the scene from the end of Frosty the Snowman (one of the previously mentioned documentary films) where Santa warns Professor Hinkle, the magician, that, “If you so much as lay a finger on the brim [of the magic hat], I will never bring you another Christmas present as long as you live.” Admittedly, the number of adults is small, but those that truly believe and can hear the sleigh bell introduced in The Polar Express are on The List and in the database.

Several studies have examined the probability of a child believing in Santa Claus by age. The results published by Max Candocia are typical: by eight years of age, a child is only 50% likely to still believe, and by fourteen that number is close to zero and remains flat.

But what about the total number? A 2019 analysis of Santa’s Christmas Eve delivery task completed by Philip Bump and published in The Washington Post placed the number of individuals to whom Santa delivers at about 550 million, living in about 400 million households. Another study from a few years earlier estimated roughly 525 million. But this just represents the number of individuals on the Nice list.

The length of the Naughty List is a little more elusive. The assumption that if one is not on the Nice list then they are Naughty is incorrect. Both categories of events are collected for both categories of individuals, so everybody has to appear in the database. We could take the total number of children under the age of fourteen in the countries to which Santa delivers. That would probably get us pretty close, with those over fourteen being a rounding error. But as an initial, conservative estimate, let’s assume a 50/50 split (it is generally accepted that the Nice far outnumber the Naughty, perhaps by as much as 9 to 1). To be safe, we’ll use 1.1 billion Individuals and 800 million households in the active master list.

The last record count consideration is list maintenance. When you no longer believe in Santa Claus and can no longer hear the sleigh bell, your information is no longer needed in the active database. Of course, one might believe again and be “reinstated.” It’s rare, but it happens. The analysts, of course, would prefer that data be kept indefinitely. A compromise was reached where the active list contains only those that still believed at the beginning of the year. All others are archived for 125 years. That should catch everybody.

Each individual is assigned a 16-byte GUID (Globally Unique Identifier). With 2^128 possible 16-byte values, collisions shouldn’t be an issue. Let’s say that with the name and other demographic information, a total of 300 bytes are required. Storing Individuals’ information will therefore consume about 310 GB. This is small enough that an initial seed copy can be pushed out to the Regional Repositories, with a Master Data Management system coordinating the distribution of changes.

Assuming that 7% of the individuals roll off each year, the 125-year archive would be expected to be about 9 times the size of the active list or 2.8 TB. Even doubling that twice to accommodate population growth is still an entirely manageable number.

Categorization:

The categorization, scoring metrics, and individual identifier are stored for each year one is on The List. Assuming 70 bytes per Individual per year for 14 years and the same archive 9x scaling factor, active Categorization will use 1 TB, and its archive 9 TB.
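The Individual and Categorization estimates can be reproduced with a short Python sketch. The variable names are illustrative, and one assumption is mine alone: the quoted figures line up best if "GB" and "TB" are read as binary units (2^30 and 2^40 bytes), so that is what the script prints.

```python
GIB = 2**30  # assumption: the article's "GB" read as binary gigabytes
TIB = 2**40  # likewise for "TB"

INDIVIDUALS = 1_100_000_000
INDIVIDUAL_ROW_BYTES = 300
CATEGORIZATION_ROW_BYTES = 70
YEARS_ON_LIST = 14
ANNUAL_ROLL_OFF = 0.07
ARCHIVE_YEARS = 125

# 7% rolling off per year for 125 years is 8.75 times the active list,
# which the text rounds to 9.
archive_factor = round(ANNUAL_ROLL_OFF * ARCHIVE_YEARS)

individual_bytes = INDIVIDUALS * INDIVIDUAL_ROW_BYTES
categorization_bytes = INDIVIDUALS * CATEGORIZATION_ROW_BYTES * YEARS_ON_LIST

for label, size in [("Individual", individual_bytes), ("Categorization", categorization_bytes)]:
    active_gib = size / GIB
    archive_tib = size * archive_factor / TIB
    print(f"{label}: active {active_gib:,.0f} GiB, archive {archive_tib:.1f} TiB")
```

The results land in the same neighborhood as the rounded figures in the text; the small differences come from rounding and from mixing decimal and binary units.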

Events:

Even though I don’t need to worry about storing the raw event media, summarized event information as well as pointers to the event media are associated with the Individual. File references are recorded in the Regional Repositories. Once described and categorized, very, very few of these media files are ever accessed. Only when there is a question, edge case, litigation, or appeal. It seems like a whole lot of time, expense, and effort for something that’s not going to be needed very often, but it’s critical that the information be available when it does happen. In addition, the data is useful for quality and training purposes.

The number of opportunities that an individual has to be Naughty or Nice on a given day varies tremendously. Besides the usual variables like personality, social setting, and age, an increasing number of interactions are digital rather than personal.

A Statista study of daily digital data interactions per connected person worldwide found that the number increased from roughly 300 in 2010 to 1,400 in 2020, and is projected to approach 5,000 by 2025. Many more than when we interacted with each other mostly in person.

Let’s assume an average of 300 classifiable Naughty-Nice interactions per day. That seems like an awful lot, but you’ll notice that they add up when you start paying attention. Each event includes a description, categorization, detailed metrics used for scoring, timestamp, source, and the identifier for the media file. In addition, a link to the individual is stored in an associative table. Let’s assume that all of that requires 300 bytes for a single interaction (again, probably an overestimation). That works out to 32 MB per person per year. Double that because events from both the current and previous year are stored. Now, multiply that by a billion individuals and that comes to 65 petabytes. Now we’re getting into some pretty big numbers. Of course, it’s distributed so not all of that space is needed in the same place. Furthermore, nearly all of the data elements are easily and highly compressible so it seems that reducing the size by a factor of five is doable, bringing the size down to about 13 petabytes.
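The event-volume arithmetic is easy to lose track of, so here it is as a minimal Python sketch. The names are illustrative; the inputs are the assumptions stated in the paragraph above, including the round "billion individuals" and the factor-of-five compression.

```python
EVENTS_PER_DAY = 300          # assumed classifiable interactions per person per day
BYTES_PER_EVENT = 300         # assumed size of one event row plus its link row
DAYS_PER_YEAR = 365
YEARS_RETAINED = 2            # current and previous year
INDIVIDUALS = 1_000_000_000   # the round billion used in the text
COMPRESSION_FACTOR = 5        # assumed achievable compression
PB = 10**15                   # decimal petabyte

per_person_year = EVENTS_PER_DAY * BYTES_PER_EVENT * DAYS_PER_YEAR
raw_total = per_person_year * YEARS_RETAINED * INDIVIDUALS
compressed_total = raw_total / COMPRESSION_FACTOR

print(f"{per_person_year / 10**6:.2f} MB per person per year")
print(f"{raw_total / PB:.1f} PB raw, {compressed_total / PB:.1f} PB compressed")
```

This gives roughly 32.85 MB per person per year, 65.7 PB raw, and about 13.1 PB after compression, in line with the rounded figures above.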

This data is stored as long as the individual is in the active database, or 15 years, whichever is longer. That’s nearly 200 petabytes. Probably need to go back and do some compression studies to get that number down some more. Deduplication might help as well.

Locations:

The ISO 19160-1 address specification does not mandate field lengths. Using the maximum number of possible characters for each address component would require more than 1000 bytes for each address. By using variable length character strings, we won’t be storing anywhere close to that maximum. Nearly all addresses are shorter than 100 characters, meaning that even a billion addresses would require just 93 gigabytes.

Someone on the call asked whether that number could be reduced further by normalizing the address components. That’s an idea, but in practice the component identifiers require more space than the actual values themselves. Best just to leave it denormalized (but multi-value compression can be used to reduce the table size).

The associative table linking Location and Individual requires 36 bytes per Individual per year. Assuming 14 years on the active list and the same 9x archive scaling, location data requires 470 GB and Archived Location data 4.1 TB.
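The location figures can be checked the same way. In this sketch the names are illustrative and two assumptions are mine: a round billion rows (as in the address estimate) and binary units for "GB" and "TB", which is the reading that reproduces the quoted numbers.

```python
GIB = 2**30  # assumption: "GB" read as binary gigabytes
TIB = 2**40  # assumption: "TB" read as binary terabytes

INDIVIDUALS = 1_000_000_000   # round billion, matching the address estimate
ADDRESS_BYTES = 100           # upper bound for nearly all addresses
LINK_BYTES_PER_YEAR = 36      # Location-to-Individual associative row
YEARS_ON_LIST = 14
ARCHIVE_FACTOR = 9            # same scaling used for the other archives

address_bytes = INDIVIDUALS * ADDRESS_BYTES
link_bytes = INDIVIDUALS * LINK_BYTES_PER_YEAR * YEARS_ON_LIST
link_archive_bytes = link_bytes * ARCHIVE_FACTOR

print(f"addresses:    {address_bytes / GIB:.0f} GiB")
print(f"active links: {link_bytes / GIB:.0f} GiB")
print(f"archive:      {link_archive_bytes / TIB:.1f} TiB")
```

Under those assumptions the script gives about 93 GiB of addresses, about 469 GiB of active links, and about 4.1 TiB of archive.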

Finally, the Location Events are similar to the Naughty-Nice events. Many fewer are stored because the focus is on determining where an individual will be on a single day. Instead of 300 events per day, they get an average of fewer than 10 total. These events are also kept for two years for research purposes, for a total of 3 TB.

The resulting database sizing was pretty much as expected:

- 1.7 TB for Main tables (Individual, Categorization, and Delivery Location)

- 15.9 TB for Archive tables (Individual, Categorization, and Delivery Location)

- 13 PB for Event tables (Naughty-Nice and Location) – Getting this last number down is a key project focus.

I presented my thoughts to the team and the feedback was overwhelmingly positive. They said that they could take it from there. I’ll admit I was disappointed that I wouldn’t be participating in the project through to its conclusion, but I was honored to be asked to consult at all.

Just before that final meeting concluded, I asked if I could share the experience through my blog. They said that it was fine, but that the story would have to be reviewed and approved first. There might need to be some redactions before it goes public. I was concerned but they told me not to worry about it. They told me not to worry about a lot of things. They said that I wouldn’t remember what had been redacted. I wouldn’t even notice.

If you have any suggestions or improvements, please let me know and I’ll pass them along.

In retrospect, the whole thing was pretty basic and I don’t think they really needed my help at all. Maybe they were told to get outside eyes. Maybe they just wanted to share.

And maybe somewhere, probably in a cloud and certainly someplace cold, there’s a newly reactivated database entry.

Maybe several.

Maybe that’s why.

Featured Image: rdj73, “Checking the List,” at deviantart.com. Some rights reserved.